
Ting-Chun Wang, Principal Research Scientist, NVIDIA, Santa Clara, CA


I'm a principal research scientist in the Deep Imagination Research (DIR) group at NVIDIA, working on computer vision, machine learning, and computer graphics.

News

Check out our Cosmos-Predict1 and Cosmos-Transfer1 papers on the project website!

I am serving as an area chair for CVPR 2025 and ICLR 2025.

Selected Publications


NVIDIA: Ting-Chun Wang (core contributor) et al.

arXiv, 2025


NVIDIA: Ting-Chun Wang (core contributor) et al.

arXiv, 2025


Yu Zeng, Vishal M. Patel, Haocheng Wang, Xun Huang, Ting-Chun Wang, Ming-Yu Liu, Yogesh Balaji

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024


Johanna Karras, Aleksander Holynski, Ting-Chun Wang, Ira Kemelmacher-Shlizerman

International Conference on Computer Vision (ICCV), 2023


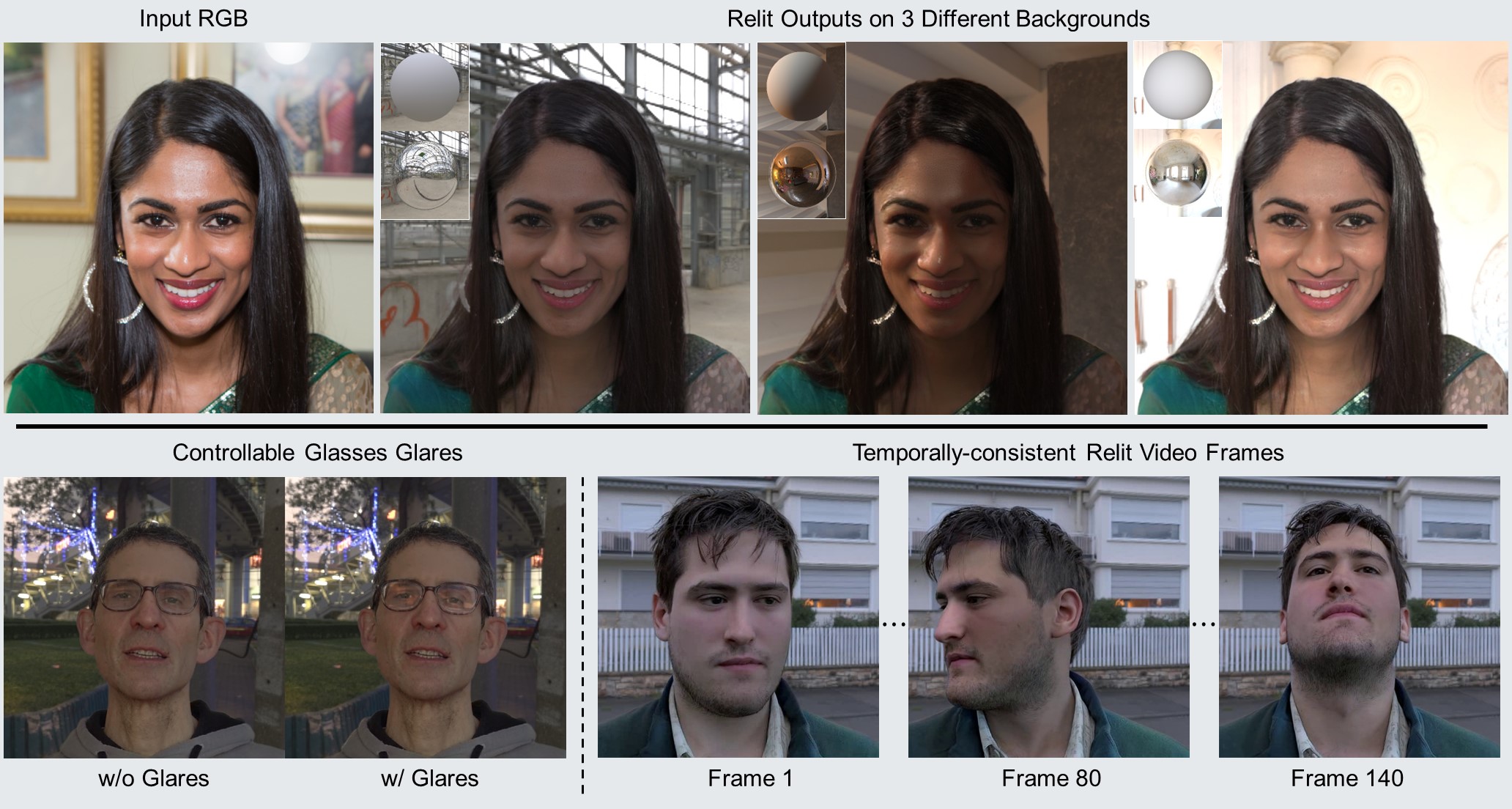

Siddharth Gururani, Arun Mallya, Ting-Chun Wang, Rafael Valle, Ming-Yu Liu

International Conference on Computer Vision (ICCV), 2023


Arun Mallya, Ting-Chun Wang, Ming-Yu Liu

Conference on Neural Information Processing Systems (NeurIPS), 2022


Tim Brooks, Janne Hellsten, Miika Aittala, Ting-Chun Wang, Timo Aila, Jaakko Lehtinen, Ming-Yu Liu, Alexei A. Efros, Tero Karras

Conference on Neural Information Processing Systems (NeurIPS), 2022


Yu-Ying Yeh, Koki Nagano, Sameh Khamis, Jan Kautz, Ming-Yu Liu, Ting-Chun Wang

ACM Transactions on Graphics (SIGGRAPH Asia), 2022


Xun Huang, Arun Mallya, Ting-Chun Wang, Ming-Yu Liu

European Conference on Computer Vision (ECCV), 2022


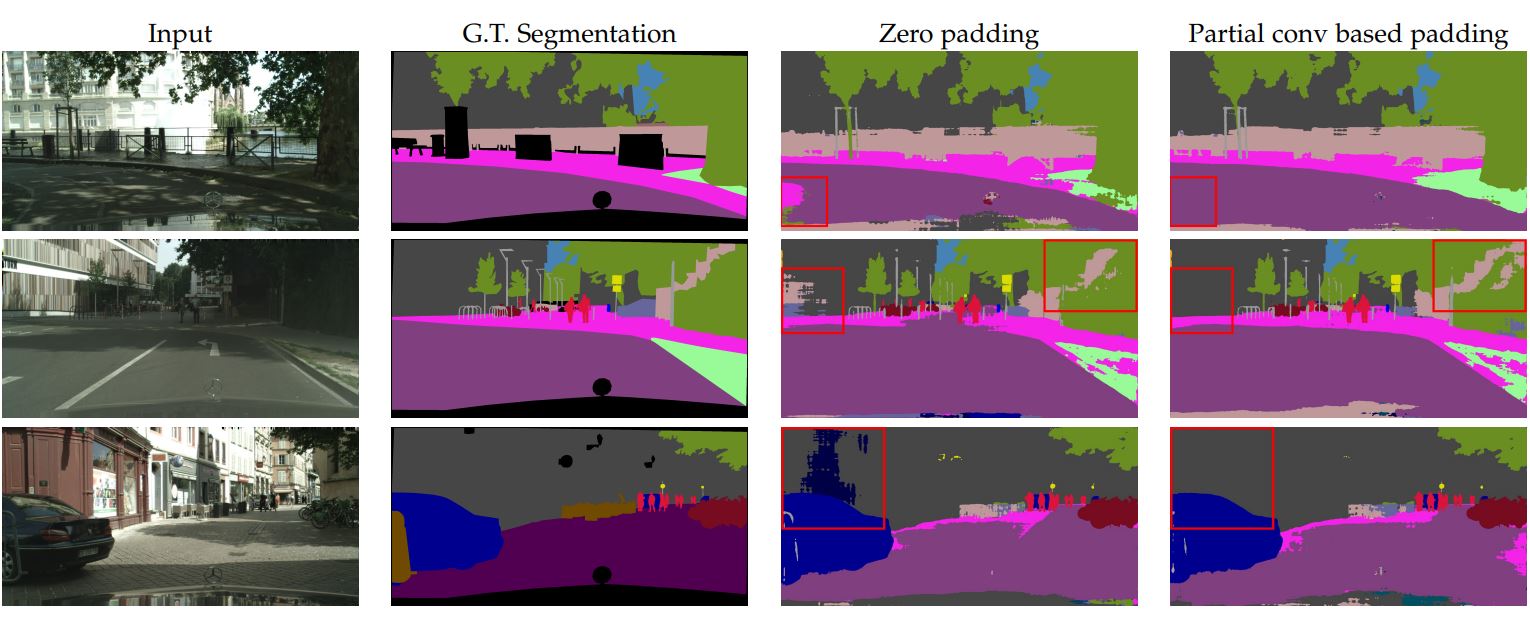

Guilin Liu, Aysegul Dundar, Kevin J. Shih, Ting-Chun Wang, Fitsum A. Reda, Karan Sapra, Zhiding Yu, Xiaodong Yang, Andrew Tao, Bryan Catanzaro

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022


Ting-Chun Wang, Arun Mallya, Ming-Yu Liu

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021 (oral presentation)


Guilin Liu, Rohan Taori, Ting-Chun Wang, Zhiding Yu, Shiqiu Liu, Fitsum A. Reda, Karan Sapra, Andrew Tao, Bryan Catanzaro

ACM Transactions on Graphics, 2021 (provisionally accepted)


Ming-Yu Liu, Xun Huang, Jiahui Yu, Ting-Chun Wang, Arun Mallya

Proceedings of The IEEE, 2021


Arun Mallya*, Ting-Chun Wang*, Karan Sapra, Ming-Yu Liu (*equal contribution)

European Conference on Computer Vision (ECCV), 2020


Aysegul Dundar, Ming-Yu Liu, Zhiding Yu, Ting-Chun Wang, John Zedlewski, Jan Kautz

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2020


Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz, Bryan Catanzaro

Conference on Neural Information Processing Systems (NeurIPS), 2019


Hsin-Ying Lee, Xiaodong Yang, Ming-Yu Liu, Ting-Chun Wang, Yu-Ding Lu, Ming-Hsuan Yang, Jan Kautz

Conference on Neural Information Processing Systems (NeurIPS), 2019


Taesung Park, Ming-Yu Liu, Ting-Chun Wang, Jun-Yan Zhu

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019 (best paper finalist)


Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, Bryan Catanzaro

Conference on Neural Information Processing Systems (NeurIPS), 2018


Guilin Liu, Kevin J. Shih, Ting-Chun Wang, Fitsum A. Reda, Karan Sapra, Zhiding Yu, Andrew Tao, Bryan Catanzaro

arXiv preprint arXiv:1811.11718


Aysegul Dundar, Ming-Yu Liu, Ting-Chun Wang, John Zedlewski, Jan Kautz

arXiv preprint arXiv:1807.09384


Guilin Liu, Fitsum A. Reda, Kevin J. Shih, Ting-Chun Wang, Andrew Tao, Bryan Catanzaro

European Conference on Computer Vision (ECCV), 2018


Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, Bryan Catanzaro

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018 (oral presentation)


Ting-Chun Wang

PhD Thesis, 2017


Professional Activities

Tutorial/Workshop Co-organizer

CVPR 2023: AI for Content Creation Workshop

CVPR 2022: AI for Content Creation Workshop

ECCV 2020: Tutorial on Accelerating Computer Vision with Mixed Precision

ICCV 2019: Tutorial on Accelerating Computer Vision with Mixed Precision

ICIP 2019: Tutorial on Image-to-Image Translation

Conference Area Chair: CVPR, ECCV, ICLR, WACV

Conference Reviewer: CVPR, ICCV, ECCV, SIGGRAPH, SIGGRAPH Asia, Eurographics, NeurIPS, ICLR, ICRA

Journal Reviewer: TPAMI, IJCV, TOG, TIP, TMM, TVCG, TCSVT, CVIU

Awards

Best in Show Award and Audience Choice Award, Real-Time Live!, SIGGRAPH 2019

Best Paper Finalist, CVPR 2019

1st place, Domain Adaptation for Semantic Segmentation Competition, WAD Challenge, CVPR 2018

NTECH Best Paper Award, NVIDIA 2018

Pioneer Research Award, NVIDIA 2018

Software

Imaginaire: A common codebase for training generative models.

Few-shot vid2vid: Few-shot video-to-video translation.

SPADE: Semantic image synthesis on diverse datasets, including Flickr and COCO.

vid2vid: High-resolution video-to-video translation.

pix2pixHD: High-resolution image-to-image translation.

Talks

Face-vid2vid: One-Shot Free-View Neural Talking-Head Synthesis for Video Conferencing

CVPR (2021)

Few-Shot Video-to-Video Synthesis

ICCV Workshop on Advances in Image Manipulation (2019)

Mixed-Precision Training for pix2pixHD

ICCV Tutorial on Accelerating Computer Vision with Mixed Precision (2019)

ICIP Tutorial on Image-to-Image Translation (2019)

CVPR Workshop on Deep Learning for Content Creation (2019)

High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs (pix2pixHD)

CVPR (2018)

Beyond Photo-Consistency: Shape, Reflectance, and Material Estimation Using Light-Field Cameras

Dissertation talk (2017)